First, single NAT. In this scenario, you manually create a NAT translation. In most cases, you have a private network using IP addresses defined in RFC 1918 on the inside of your firewall. Since this address space is not routable on the public Internet, the firewall must rewrite the source address of any packet originating on your private network if you want to reach a host outside of your network. Likewise, if you have a server inside your private network that should be reachable from the Internet, incoming packets must have the destination IP address rewritten as they cross your firewall. This is pretty straightforward on the ASA. We'll start with the following interface configuration:

interface GigabitEthernet0.10

description INSIDE

vlan 10

nameif INSIDE

security-level 100

ip address 192.168.1.1 255.255.255.0

!

interface GigabitEthernet0.300

description OUTSIDE

vlan 300

nameif OUTSIDE

security-level 0

ip address 100.64.1.53 255.255.255.248

!

Next, we will define the client PC's "real" and "mapped" IP addresses (the IP address actually configured on the client, and the IP address that Internet hosts will see, respectively):

object network CLIENT

host 192.168.1.3

object network CLIENT-OUTSIDE

host 100.64.1.129

Finally, we create the NAT statement to translate the client's real IP to the mapped IP:

nat (INSIDE,OUTSIDE) source static CLIENT CLIENT-OUTSIDE

Now, when the client tries to reach a host on the public Internet, the firewall will rewrite incoming and outgoing packets as described above. To test this, I created a simple CGI script on a web server that displays the "environment variables" passed to the CGI in an HTTP session:

As you can see, the web server sees the client PC's IP address as 100.64.1.129 (okay, yes, technically, that is not a publicly routable IP address either; 100.64.0.0/10 is the shared address space set aside for "Carrier-grade NAT" in RFC 6598, but even in my labs in GNS3, I don't like to use real public IP address space). Pretty simple, right?
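If you want to double-check from the firewall side as well, a couple of show commands help. This is just a quick sketch of what I'd try; the exact output differs between ASA versions, so I'm not reproducing it here.

```
! List the configured NAT rules with their real and mapped addresses and hit counts
show nat detail
! List the translations (xlates) currently held in the translation table
show xlate
```

The static rule for 192.168.1.3 and 100.64.1.129 should appear in both, and the hit counters in the first command should climb as the client generates traffic.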

Next up, Auto NAT (or Object-NAT, IIRC -- if I'm wrong, please leave a comment below!). Object-NAT looks very simple, and in many on-line tutorials I've seen, it is described as one of the simplest ways of setting up NAT on a firewall. However, I have found it to be quite finicky, at least on the version of ASA code that I'm using in GNS3. More than once, I have copied an existing config, changing IP addresses and object names as required, only to find that one config works while the other does not. Even more frustrating, the ASA code that I'm using in GNS3 is much older than the code I'm using in the real world, and the object-NAT configuration is slightly different between the two versions. Despite these differences, let's give object-NAT a try.

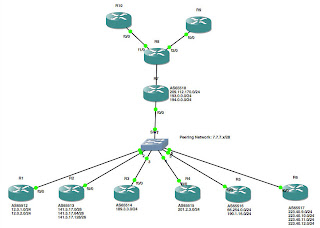

For the object-NAT example, we'll use the following network:

...and the following network objects:

1. The Knoppix Clone's real (RFC-1918) IP address:

object network CLONE-REAL-IP

host 192.168.1.2

!

2. The Knoppix Clone's NAT (i.e., "public") IP address:

object network CLONE-NAT-IP

host 100.64.1.2

!

3. The Knoppix Host's real (RFC-1918) IP address:

object network K32-REAL-IP

host 10.0.0.2

!

4. The Knoppix Host's NAT IP address:

object network K32-NAT-IP

host 100.64.0.2

!

The Knoppix Clone will be on our "inside" network, and Knoppix-32 will be on the "public" network. Yes, I know -- I am using private IP addresses for everything, but just play along, okay? ;) Auto-NAT is sometimes called Object-NAT because the NAT statement exists inside of an "object network ..." statement:

object network CLONE-REAL-IP

nat (SIDE_B,SIDE_A) dynamic CLONE-NAT-IP

!

Just for giggles, try this after setting up the object NAT shown above:

sho run | begin CLONE-REAL-IP

Most likely, you saw something like this:

object network CLONE-REAL-IP

host 192.168.1.2

object network CLONE-NAT-IP

host 100.64.1.2

object network K32-REAL-IP

host 10.0.0.2

object network K32-NAT-IP

host 100.64.0.2

...

Wait a minute...what happened to our NAT statement? Well, if you keep scrolling, you'll see it somewhere down near the end of the config. For whatever reason, Cisco decided it made sense to break the object definition in two, putting the host (or subnet or range or...) portion in one place in the config and the NAT portion in another </shrug> Don't ask me; I don't know why, either.
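If you'd rather not scroll, you can ask for just the NAT portion of the config. A small sketch; as far as I know this works on any ASA release that supports object NAT, but treat that as an assumption for your version:

```
! Show only the NAT-related lines of the running config, including the
! "nat" half of each object network definition
show running-config nat
```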

In any case, now that the NAT rule has been created, let's try to ping the Knoppix Clone from the Knoppix Host:

Side_A pings Side_B:

ping 100.64.1.2

Side_A tcpdump:

10.0.0.2 > 100.64.1.2

10.0.0.2 > 100.64.1.2

Side_B tcpdump:

...nothing...

Next, we'll try pinging from the Knoppix Clone to the Knoppix Host:

Side_B pings Side_A:

ping 10.0.0.2

Side_A tcpdump:

100.64.1.2 > 10.0.0.2

10.0.0.2 > 100.64.1.2

Side_B tcpdump:

192.168.1.2 > 10.0.0.2

10.0.0.2 > 192.168.1.2

Did that make sense? Because this is a dynamic translation, the firewall only builds a mapping when traffic originates on the inside network (established, related packets will always be allowed back in with a stateful firewall). As a result, the Knoppix Host cannot ping the Knoppix Clone, but the Knoppix Clone can ping the Knoppix Host.
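If you do want the Knoppix Host to be able to start the conversation, one option is a static object NAT plus an access list on the outside-facing interface. The following is only a sketch: the ACL name SIDE_A_IN is made up for illustration, and I'm assuming SIDE_A has the lower security level and that you are on ASA code where ACLs reference the real (untranslated) address, so check it against your own version.

```
! Static one-to-one translation in place of the dynamic rule,
! so a mapping exists even before the inside host sends anything
object network CLONE-REAL-IP
 nat (SIDE_B,SIDE_A) static CLONE-NAT-IP
!
! Hypothetical ACL: permit ICMP from the Knoppix Host to the Clone's real IP
access-list SIDE_A_IN extended permit icmp host 10.0.0.2 host 192.168.1.2
access-group SIDE_A_IN in interface SIDE_A
```

With something like this in place, the ping to 100.64.1.2 from Side_A should show up in the Side_B tcpdump as traffic to 192.168.1.2.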

Things really get interesting, however, with Twice NAT. In this situation, not only do you want to rewrite the IP address of a system on the INSIDE network, but you also want to rewrite the IP address of a system on the OUTSIDE network as well. Why? Well...I don't know. But if for some reason you do, here's how to do it :)

We'll use the same ASA interface configuration that we used in the discussion of Single NAT above.

Next, we'll define the objects used in this config. As in the Single NAT discussion, we'll use "CLIENT" to mean the host on the inside network and "CLIENT-OUTSIDE" to mean the NAT'd IP address of the client host (the IP that outside hosts see the request coming from). However, we will create two new objects: "MAPPED-DEST", which is the IP address that CLIENT will use in URLs, ping commands, etc., and "REAL-DEST", which is the actual IP address of the host on the OUTSIDE network. In other words, just as "CLIENT" refers to the actual IP address configured on the host on the INSIDE network, "REAL-DEST" refers to the actual IP address configured on the host on the OUTSIDE network. Likewise, just as "CLIENT-OUTSIDE" refers to the IP address that OUTSIDE hosts use to reach the INSIDE network, "MAPPED-DEST" refers to the IP address that INSIDE hosts use to reach the OUTSIDE network:

object network MAPPED-DEST

host 172.16.0.1

object network REAL-DEST

host 100.64.1.3

Finally, we create the NAT statement to set up the Twice NAT:

nat (INSIDE,OUTSIDE) source static CLIENT CLIENT-OUTSIDE destination static MAPPED-DEST REAL-DEST

The only difference between this config and the Single NAT config is the addition of "destination static MAPPED-DEST REAL-DEST" at the end of the NAT statement. On the CLIENT computer, we can now try to access the server on the OUTSIDE network using the IP address 172.16.0.1:

As you can see, we are accessing the web server on the outside network by using the IP address 172.16.0.1, even though the IP address on the server is actually 100.64.1.3. Likewise, the web server sees the request coming from 100.64.1.129, even though the client's IP address is actually 192.168.1.3. In other words, we are NAT'ing both the INSIDE and OUTSIDE networks.
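One way to check a Twice NAT rule without generating real traffic is the ASA's packet-tracer command, which simulates a packet and reports each processing phase. A sketch, assuming a TCP connection to port 80 on the web server and an arbitrary source port of 1025 (both picked purely for illustration):

```
! Simulate CLIENT (192.168.1.3) opening a web connection to MAPPED-DEST (172.16.0.1)
packet-tracer input INSIDE tcp 192.168.1.3 1025 172.16.0.1 80 detailed
```

If the rule matches, the trace should show the destination being untranslated to 100.64.1.3 and the source being translated to 100.64.1.129.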

For our last example, we'll set up identity-NAT. I have to admit, this twist on NAT really threw me when I first encountered it. "Wait, you mean to tell me that we are creating a NAT rule to rewrite an incoming packet to use the EXACT SAME IP address it originally had?!?!" At first, it does seem a little silly, doesn't it? However, consider this scenario: you have a firewall with an inside and outside network, and you have an object-NAT rule to translate outgoing requests to a pool of publicly routable IP addresses. However, for some reason, there is a host or subnet connected to your outside interface that you wish to access via your private, inside IP addresses. For example, it is possible that in a large enterprise network, you might have firewalls between various departments inside the company. These firewalls might do the NAT translations from RFC 1918 addressing to public addressing, but you might still want to access a resource in another department via your private IP range (that's kinda-sorta the situation with one of the ASAs I currently manage). In this case, the identity-NAT rule will override the object-NAT rule for the objects that you specify. In terms of configuring identity-NAT, it's pretty much the same as single-NAT, except that you use the same object for both the real and the mapped address in the NAT rule:

object network CLIENT

host 192.168.1.3

object network CLIENT-OUTSIDE

host 100.64.1.129

!

nat (INSIDE,OUTSIDE) source static CLIENT CLIENT

No, that's not a typo -- I really did mean "CLIENT CLIENT" in the NAT rule. Essentially, we are saying, "When a packet comes into the INSIDE interface with the source IP address 192.168.1.3, rewrite the packet exiting the OUTSIDE interface with the source IP address of 192.168.1.3." This works because the ASA follows a hierarchy much like the mathematical concept of "order of operations" to determine at what point the various types of NAT will be performed. Manual NAT rules like this one are checked before object-NAT rules, and the first match wins, so the identity-NAT rule will override the object-NAT.
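To see where your own rules land in that hierarchy, show nat lists them grouped by section. The second command below is an optional variation I'm adding for illustration only, not part of the config above: the after-auto keyword places a manual rule behind the object-NAT rules instead of in front of them.

```
! List the NAT rules in the order the ASA checks them, grouped by section
show nat
! Optional variation (configuration mode): put a manual rule after the object-NAT rules
nat (INSIDE,OUTSIDE) after-auto source static CLIENT CLIENT
```

For the identity-NAT scenario described here you would normally leave after-auto off, since the whole point is for the identity rule to be matched first.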